Asking RNNs+LTSMs: What Would Mozart Write?

Preamble: A natural progression beyond artificial intelligence is artificial creativity. I've been interested in AC for awhile and started learning of the various criteria that the scholarly community has devised to test AC in art, music, writing, etc. (I think crosswords might present an interesting Turing-like test for AC). In music, a machine-generated score which is deemed interesting, challenging, and unique (and indistinguishable from the real work of a great master), would be a major accomplishment. Machine-generated music has a long history (cf. "Computer Models of Musical Creativity" by D. Cope; Cambridge, MA: MIT Press, 2006).

Deep Learning at the character level: With the resurgence of interest in Recurrent Neural Networks (RNNs) with Long Short-Term Memory (LSTM), I thought it would be interesting to see how far we could go in autogenerating music. RNNs have actually been around in music generation for awhile (even with LSTM; see this site and this 2014 paper from Liu & Ramakrishnan and references therein), but we're now getting into an era where we can train on a big corpus and thus train a big, complex model. Andrej Karpathy's recent blog showed how training a character-level model on Shakespeare and Paul Graham essays could yield interesting, albeit fairly garbled, text that seems to mimic the flow and usage of English in those contexts. Perhaps more interesting was his ability to get nearly perfectly compilable LaTeX, HTML, and C. A strong conclusion is that character-level RNNs + LSTMs get pretty good at learning structure even if the sense of the expression seems like nonsense. This an important conclusion related to mine (if you keep reading).

Fig 1: Image of a Piano Roll (via http://www.elektra60.com/news/dreams-vinyl-story-lp-long-playing-record-jac-holzman). Can a machine generate a score of intererst?

Data Prep for training

While we're using advanced Natural Language Processing (NLP) in my company, wise.io, using RNNs is still very early days for us (hence the experimentation here). Also, I should say that I am nowhere near an expert in deep learning and so, for me, a critical contribution from Andrej Karpathy is the availability of code that I could actually get to run and understand. Likewise, I am nowhere near an expert on music theory nor practice. I dabbled in piano about 30 years ago and can read music, but that's about it. So I'm starting this project on a few shaky legs to be sure.

Which music? To get train a model using Karpathy's codebase on GitHub, I had to create a suitable corpus. Sticking a single musical genre and composer makes sense. Using the fewest number of instruments seemed sensible too. So I chose piano sonatas for two hands from Mozart and Beethoven.

Which format? As I learned, there are over a dozen different digital formats used in music, some of them more versatile than others, some of them focused on enabling complex visual score representation. It became pretty clear that one of the preferred modern markup of sophisticated music manipulation codebases (like music21 from MIT) is the XML-based MusicXML (ref). The other is humdrum **kern. Both are readily convertible to each other, although humdrum appears to be more compact (and less oriented towards the visual representation of scores).

Let's see the differences between the two formats. There's a huge corpus of classical music at http://kern.humdrum.org ("7,866,496 notes in 108,703 files").

Let's get Mozart's Piano Sonata No. 7 in C major (sonata07-1.krn)

!curl -o sonata07-1.krn "http://kern.humdrum.org/cgi-bin/ksdata?l=users/craig/classical/mozart/piano/sonata&file=sonata07-1.krn"We can convert this into musicXML using music21:

import music21

m = music21.converter.parse("ana-music/corpus/mozart/sonata07-1.krn")

m.show("musicxml")The first note in musicXML is 11 lines with a length of 256 relevant characters

<part id="P1">

...

<note default-x="70.79" default-y="-50.00">

<grace/>

<pitch>

<step>C</step>

<octave>4</octave>

</pitch>

<voice>1</voice>

<type>eighth</type>

<stem>up</stem>

<beam number="1">begin</beam>

</note>

...

</part>where as the first note in humdrum format is a single line with just 14 characters:

.\t(32cQ/LLL\t.\nBased on this compactness (without the apparent sacrifice of expressiveness) I chose to use the humdrum **kern format for this experiment. Rather than digging around and scraping the kern.humdrum.org site, I instead dug around Github and found a project that had already compiled a tidy little corpus. The project is called ana-music ("Automatic analysis of classical music for generative composition").It compiled 32 Sonatas by Beethoven in 102 movements and 17 Sonatas by Mozart in 51 movements (and others).

The **kern format starts with a bunch of metadata:

!!!COM: Mozart, Wolfgang Amadeus

!!!CDT: 1756/01/27/-1791/12/05/

!!!CNT: German

!!!OTL: Piano Sonata No. 7 in C major

!!!SCT1: K<sup>1</sup> 309

!!!SCT2: K<sup>6</sup> 284b

!!!OMV: Mvmt. 1

!!!OMD: Allegro con spirito

!!!ODT: 1777///

**kern **kern **dynam

*staff2 *staff1 *staff1/2

*>[A,A,B] *>[A,A,B] *>[A,A,B]

*>norep[A,B] *>norep[A,B] *>norep[A,B]

*>A *>A *>A

*clefF4 *clefG2 *clefG2

*k[] *k[] *k[]

*C: *C: *C:

*met(c) *met(c) *met(c)

*M4/4 *M4/4 *M4/4

*MM160 *MM160 *MM160

=1- =1- =1-

....

After the structured metadata about the composer and the song (lines starting !), three staffs/voices are defined, the repeat schedule (ie. dc al coda), the key, the tempo, etc. The first staff starts with the line:

=1- =1- =1-In my first experiment, I stripped away only the ! lines and kept everything in the preamble. Since there is little training data of preamble, I found that I got mostly incorrect preambles. So then in order to have our model build solely on notes in the measure I choose to strip away the metadata, the preamble, and the numbers of the measures.

import glob

REP="@\n"

composers = ["mozart","beethoven"]

for composer in composers:

comp_txt = open(composer + ".txt","w")

ll = glob.glob(dir + "/ana-music/corpus/{composer}/*.krn".format(composer=composer))

for song in ll:

lines = open(song,"r").readlines()

out = []

found_first = False

for l in lines:

if l.startswith("="):

## new measure, replace the measure with the @ sign, not part of humdrum

out.append(REP)

found_first = True

continue

if not found_first:

## keep going until we find the end of the header and metadata

continue

if l.startswith("!"):

## ignore comments

continue

out.append(l)

comp_txt.writelines(out)

comp_txt.close()From this, I got two corpi: mozart.txt and beethoven.txt and was ready to train.

Learning

To learn, I stood up a small Ubuntu machine on terminal.com. (In case you don't know already, terminal.com is a user-friendly software layer on top of AWS where you can provision machines and dynamically change the effective size of the machine [CPUs and RAM]. There's also a GPU capability. This allows you to write code and test without burning serious cash until you're ready to crank.)

On my terminal, I installed Torch, nngraph, and optim. And then started training:

th train.lua -data_dir data/beethoven -rnn_size 128

-num_layers 3 -dropout 0.3

-eval_val_every 100

-checkpoint_dir cv/beethoven -gpuid -1

th train.lua -data_dir data/mozart -rnn_size 128 -num_layers 3

-dropout 0.05 -eval_val_every 100 \

-checkpoint_dir cv/mozart -gpuid -1

There were only 71 characters in preamble-less training sets. After seeing that it was working, I cranked up my system to faster machine (I couldn't get it to work after switching to GPU mode, which was my original intension...my guess it has something to do with the compiling of Torch without a GPU---I burned too many hours try to fix this so instead keep the training in non-GPU mode). And so, after about 18 hours it finished:

5159/5160 (epoch 29.994), train_loss = 0.47868490, grad/param norm = 4.2709

evaluating loss over split index 2

1/9...

2/9...

3/9...

4/9...

5/9...

6/9...

7/9...

8/9...

9/9...

saving checkpoint to cv/mozart/lm_lstm_epoch30.00_0.5468.t7

Note: I played only a little bit with dropout rates (for regularization) so there's obviously a lot more to try here.

Sampling with the model

Models having been built, now it was time to sample. Here's an example

th sample.lua cv/beethoven/lm_lstm_epoch12.53_0.6175.t7

-temperature 0.8 -seed 1 -primetext "@" \

-sample 1 -length 15000 -gpuid -1 > b5_0.8.txt

The -primetext @ basically says "start the measure". The -length 15000 requests 15k characters (a somewhat lengthy score). The -temperature 0.8 leads to a conservative exploration. The first two measures from the Mozart sample yeilds:

@ 8r 16cc#\LL . . 16ee\ . 8r 16cc#\ . . 16dd\JJ . 4r 16gg#\LL . . 16aa\ . . 16dd\ . . 16ff#\JJ) . . 16dd\LL . . 16gg'\ . . 16ee\ . . 16gg#\JJ . *clefF4 * * @ 8.GG#\L 16bb\LL . . 16ee\ . 8F#\ 16gg\ . . 16ee#\JJ . 8G\ 16ff#\LL . . 16bb\ . 8G#\ 16ff#\ . . 16gg#\ . 8G#\J 16ff#\ . . 16ccc#\JJ . @

!!! m1a.krn - josh bloom - AC mozart

**kern **kern **dynam

*staff2 *staff1 *staff1/2

*>[A,A,B,B] *>[A,A,B,B] *>[A,A,B,B]

*>norep[A,B] *>norep[A,B] *>norep[A,B]

*>A *>A *>A

*clefF4 *clefG2 *clefG2

*k[] *k[] *k[]

*C: *C: *C:

*M4/4 *M4/4 *M4/4

*met(c) *met(c) *met(c)

*MM80 *MM80 *MM80

...

== == ==

*- *- *-

EOF

and fixed up the measure numbering:

f = open("m1a.krn","r").readlines()

r = []

bar = 1

for l in f:

if l.startswith("@"):

if bar == 1:

r.append("=1-\t=1-\t=1-\n")

else:

r.append("={bar}\t={bar}\t={bar}\n".format(bar=bar))

bar += 1

else:

r.append(l)

open("m1a-bar.krn","w").writelines(r)

from music21 import *

m1 = converter.parse("m1a-bar.krn")

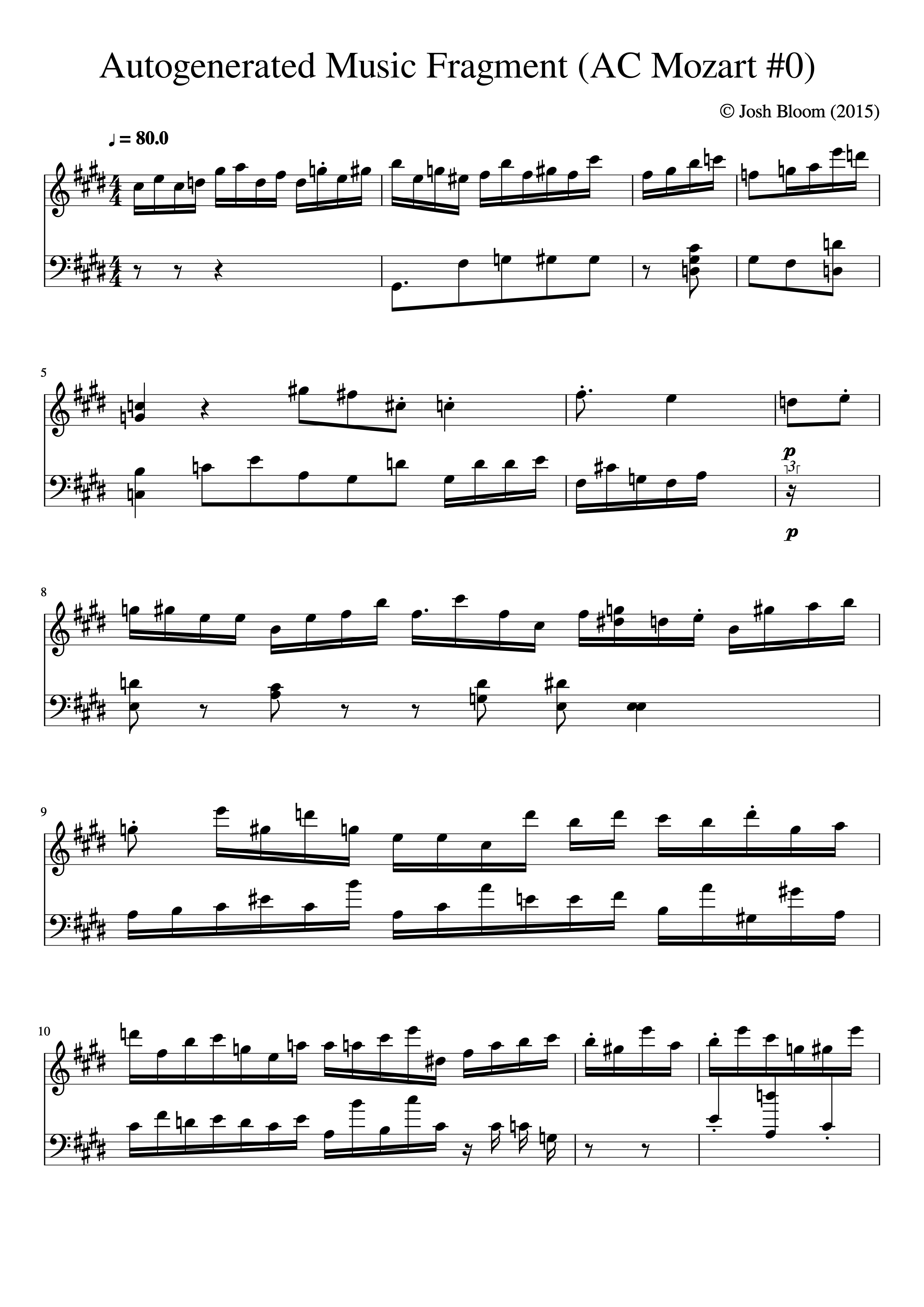

first of all—wow—this is read and accepted by music21 as valid music. I did else nothing to the notes themselves (I actually cannot write **kern so I can't cheat; in other scores I had to edit the parser in music21 to replace the seldom "unknown dynamic tags" with a rest).

m1.show("musicxml")

reveals the score:

I created a few different instantiations from Beethoven and Mozart (happy to send to anyone interested).

b5_0.8.txt = beethoven with temp 1 (sample = cv/beethoven/lm_lstm_epoch12.53_0.6175.t7)

b4.txt = beethoven with temp 1 (sample = cv/beethoven/lm_lstm_epoch12.53_0 .6175.t7)

b3.txt = beethoven with temp 1 (sample = cv/beethoven/lm_lstm_epoch24.40_0 .5743.t7)

b2.txt = beethoven with temp 1 (sample = cv/beethoven/lm_lstm_epoch30.00_0 .5574.t7)

b1.txt = beethoven with temp 0.95 (sample = cv/beethoven/lm_lstm_epoch30.00_0 .5574.t7)

Conclusions

This music does not sound all that good. But you listen to the music, to the very naive ear, it sounds like the phrasing of Mozart. There are rests, accelerations, and changing of intensity. But the chord progressions are wierd and the melody is far from memorable. Still, this is a whole lot better than a 1000 monkeys throwing darts at rolls of player piano tape.

My conslusion at this early stage is that RNNs+LSTM did a fairly decent job at learning the expression of a musical style via **kern but did a fairly poor job at learning anything about consonance. There's a bunch of possible directions to explore in the short term:

a) increase the dataset size

b) combine composers and genres

c) play with the hyperparameters of the model (how many layers? how much dropout? etc.)I'm excited to start engaging a music theory friend in this and hopefully will get to some non-trivial results (in all my spare time). This is the start obviously. Chappie want to learn more from the internet...

Edit 1: looks like a few others have started training RNNs on for music as well (link | link)

-Josh Bloom (Berkeley, June 2015)

Originally posted at wise.io/blog … see the archive.org link.